PostgreSQL Database

This workflow allows you to ingest data from a PostgreSQL database for which you have credentials.

Creating the workflow

For ingesting, you first will need to gather some credentials and figure out a little about the data.

Gathering Credentials

The credentials you will need are:

- Hostname

- Port

- Username

- Password

- Database

The host must be accessible on the internet. You can check this by testing your

credentials using psql or pgAdmin from a system on a home internet connection.

If you don't have a port, it's probably the default (5432) - You won't need to add it.

Determining What to Ingest

If you don't know the layout of your database, don't worry — you can just accept the defaults for the rest. If things don't work out like you'd hope, resetting will allow you to just try again from scratch.

If you have a table that you know has images or PDFs in it, you can specify those when starting the workflow.

If there are any issues with tables that have images or PDFs, see Troubleshooting

Starting the workflow

![[object Object]](/assets/images/configure_ingest_from_sql-5f00e6d32b386b7308ddea4ae99c6de7.png)

- Enter the domain name for the PostgreSQL host

- Enter the name of the database

- Enter the port number or leave it at the default of 5432

- Enter the SQL user name

- Enter the SQL password; this will not be shown on-screen

- Optionally supply a comma-delimited list of tables that reference images

- Optionally supply a comma-delimited list of tables reference PDFs

- Optionally supply a comma-delimited list of tables that should be ignored

- Optionally supply a comma-delimited list of columns that should be ignored

- Finally, press the big blue button at the bottom

See the results

Results will start being available in your database as soon as your workflow status is 'Started'.

To view data you have ingested, go to the Web UI for your instance.

Resetting

If data wasn't imported in the way you want, for example if an image table was imported as entities, you can simply remove all the data that this workflow ingested from a specific host and database. The following query will retrieve the keys for runs:

[

{

"FindEntity": {

"_ref":1,

"with_class":"WorkflowSpec",

"constraints": {

"workflow_name": [

"==",

"sql-loader"

]

}

}

},{

"FindEntity":{

"is_connected_to": {"ref":1},

"results" : {

"list": ["workflow_id"]

}

}

}]

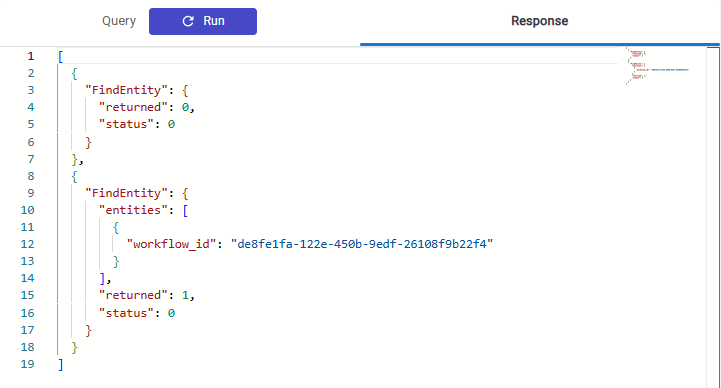

This is an example of what the results will look like:

The result for workflow_id is what you are looking for. Save it, and replace

YOUR_ID_HERE with it in the query below.

Then, you can run a query to remove all Entities, Images and Blobs that were ingested by it:

[{

"FindEntity": {

"_ref":1,

"with_class": "WorkflowRun",

"constraints": { "workflow_id": [ "==", YOUR_ID_HERE ] }

}

},{

"FindEntity": {

"_ref":2,

"is_connected_to": { "ref":1 }

}

},{

"FindImage": {

"_ref":3,

"is_connected_to": { "ref":1 }

}

},{

"FindBlob": {

"_ref":4,

"is_connected_to": { "ref":1 }

}

},{

"DeleteEntity": {

"ref":2

}

},{

"DeleteImage": {

"ref":3

}

},{

"DeleteBlob": {

"ref":4

}

}]

It will show "count" for all objects deleted. If none were, make sure you entered the workflow_id correctly, or that you didn't already delete everything!

Troubleshooting

If the workflow fails to load any data, first ensure a few things:

- the hostname is spelled correctly

- the server can be accessed from the network where the workflow is hosted (e.g. Google Cloud)

- the database name is correct

- the username and password are correct

- the user has the permissions required to access the database

If a image or PDF table fails to ingest, check that it does have a blob ( BYTEA

in PostgreSQL ) column.

If problems persist, join our Slack workspace for additional help.