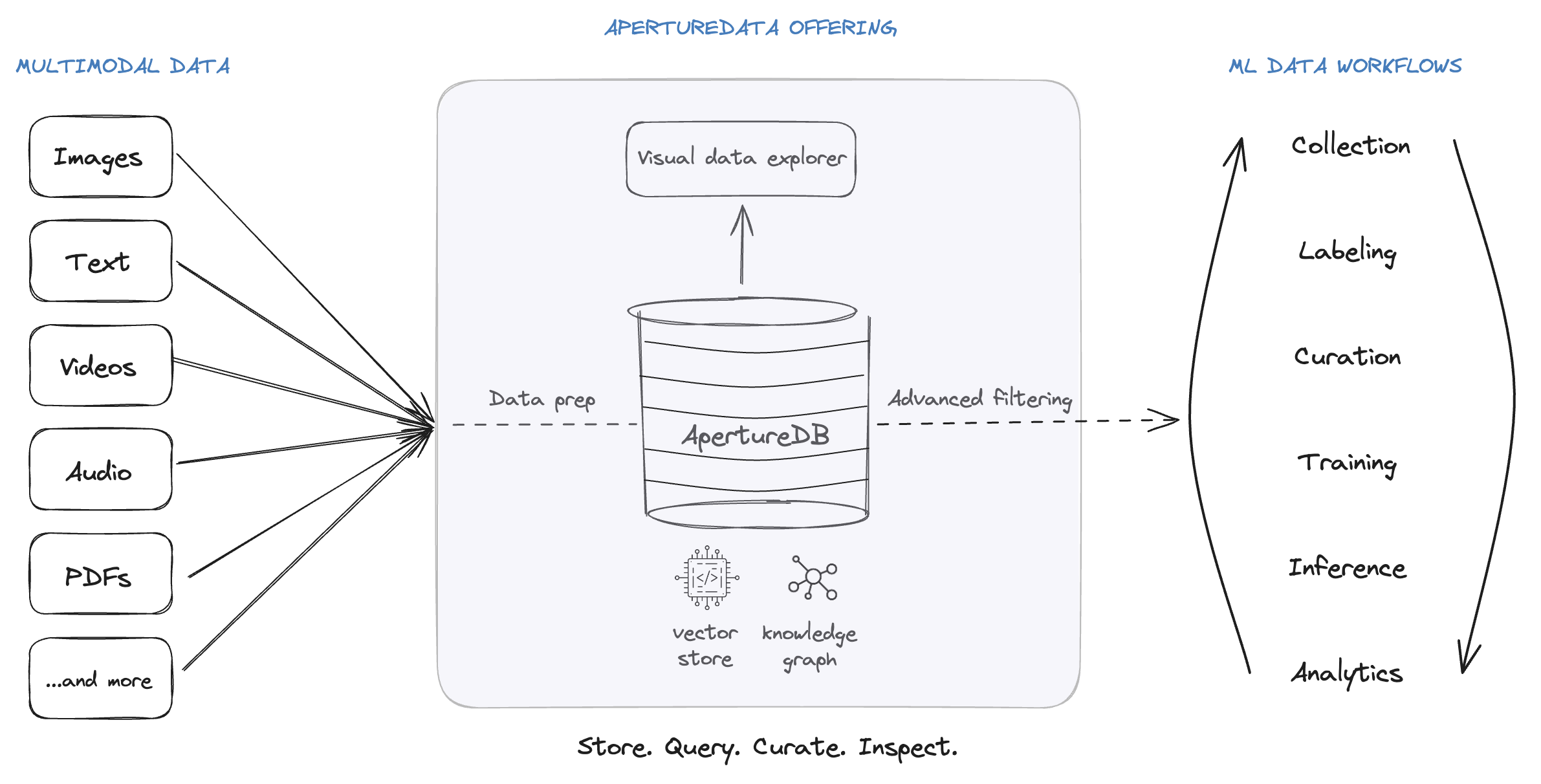

Multimodal Data

Yes, ApertureDB lets users store multimodal data such as text, images, documents, audio, video, and other unstructured data types. The metadata in ApertureDB's graph database and the embeddings in its vector database are typically either derived from this data or supply searchable criteria for finding the right objects.

Currently, ApertureDB has advanced support for images and videos, while other data types can be added and retrieved as blobs.
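The image-versus-blob distinction can be sketched as a single request. This is a minimal sketch that assumes ApertureDB's JSON query format, in which each command (here `AddImage` and `AddBlob`) is one object in a list and the binary payloads travel as a separate list consumed in command order. The property names and placeholder bytes are hypothetical, and the snippet only builds the request. Sending it would go through ApertureDB's client SDK, whose exact call should be checked in the official documentation.

```python
import json


def build_add_query(image_bytes: bytes, pdf_bytes: bytes):
    """Build an ApertureDB-style request storing one image and one PDF.

    Command and key names (AddImage, AddBlob, properties) are assumed from
    ApertureDB's JSON query language; property values are hypothetical.
    """
    query = [
        # Images get a dedicated command, reflecting their richer support.
        {"AddImage": {"properties": {"author": "alice", "source": "camera-01"}}},
        # Other data types, such as a PDF, are stored as generic blobs.
        {"AddBlob": {"properties": {"doc_type": "pdf", "title": "inspection report"}}},
    ]
    # Assumption: binary payloads are passed alongside the JSON, one per
    # blob-consuming command, in the same order as the commands.
    blobs = [image_bytes, pdf_bytes]
    return query, blobs


# Placeholder bytes stand in for real file contents.
query, blobs = build_add_query(b"\x89PNG...", b"%PDF-1.7...")
print(json.dumps(query, indent=2))
```

Both objects carry searchable properties. The image goes through an image-specific command, while the PDF is stored as an opaque blob.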

Every time a user adds one of these objects to ApertureDB, a node is created in the graph to represent it. The node holds attributes of the object that are user-provided (e.g., timestamp, author, location), automatically extracted (e.g., bounding boxes), or internal (e.g., image height or width). Each object can then be connected to existing or newly added objects, making it part of the knowledge graph.
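The following toy, in-memory Python model illustrates the node-and-connection idea. It is purely illustrative. `ToyGraph`, `add_object`, `connect`, and the edge labels are hypothetical names, not ApertureDB's API. Modeling the bounding box as its own connected node is a simplification chosen for this example.

```python
from dataclasses import dataclass
from itertools import count


@dataclass
class Node:
    node_id: int
    kind: str          # e.g. "Image", "Video", "Blob", "BoundingBox"
    properties: dict   # user-provided and internal attributes, merged


@dataclass
class Edge:
    src: int
    dst: int
    label: str


class ToyGraph:
    """Hypothetical model of 'one node per stored object'; not ApertureDB's API."""

    def __init__(self):
        self._ids = count(1)
        self.nodes: dict[int, Node] = {}
        self.edges: list[Edge] = []

    def add_object(self, kind, user_props=None, internal_props=None):
        # Every added object becomes a node. Internal attributes are merged
        # with (and here take precedence over) user-provided ones.
        props = {**(user_props or {}), **(internal_props or {})}
        node = Node(next(self._ids), kind, props)
        self.nodes[node.node_id] = node
        return node.node_id

    def connect(self, src, dst, label):
        # Objects can be linked to existing or newly added objects.
        if src not in self.nodes or dst not in self.nodes:
            raise KeyError("both endpoints must already exist")
        self.edges.append(Edge(src, dst, label))

    def neighbors(self, node_id):
        # Follow outgoing edges from a node.
        return [self.nodes[e.dst] for e in self.edges if e.src == node_id]


g = ToyGraph()
img = g.add_object(
    "Image",
    user_props={"author": "alice", "timestamp": "2024-05-01T10:00:00Z", "location": "lab-2"},
    internal_props={"width": 1920, "height": 1080},
)
# An automatically extracted region, modeled here as its own connected node.
box = g.add_object("BoundingBox", user_props={"label": "car"},
                   internal_props={"x": 40, "y": 60, "w": 200, "h": 120})
report = g.add_object("Blob", user_props={"doc_type": "pdf"})
g.connect(img, box, "has_bounding_box")
g.connect(img, report, "described_by")
print([n.kind for n in g.neighbors(img)])
```

Because connections are stored separately from node attributes, a new object can be linked to an existing one later without rewriting either node.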