Interact with PyTorch Objects

This notebook illustrates a few ways in which you can work with PyTorch and ApertureDB.

Prerequisites:

- Access to an ApertureDB instance.

- aperturedb-python installed (note that PyTorch is pulled in as a dependency of aperturedb).

- COCO dataset files downloaded. We will use the validation set in the following cells.

- If run in Colab, the ApertureDB client needs to be set up to point to your instance.

Install the resources needed to run this example.

# Get the resources for the coco dataset

!mkdir coco

# Validation images

!cd coco && wget http://images.cocodataset.org/zips/val2017.zip && unzip -qq val2017.zip

# Annotations

!cd coco && wget http://images.cocodataset.org/annotations/annotations_trainval2017.zip && unzip -qq annotations_trainval2017.zip

# Get the CocoDataPytorch from aperturedb examples.

!wget https://github.com/aperture-data/aperturedb-python/raw/refs/heads/develop/examples/CocoDataPyTorch.py

--2024-09-26 14:56:03-- http://images.cocodataset.org/zips/val2017.zip

Resolving images.cocodataset.org (images.cocodataset.org)... 3.5.28.122, 52.216.50.9, 3.5.2.50, ...

Connecting to images.cocodataset.org (images.cocodataset.org)|3.5.28.122|:80... connected.

HTTP request sent, awaiting response... 200 OK

Length: 815585330 (778M) [application/zip]

Saving to: ‘val2017.zip’

val2017.zip 100%[===================>] 777.80M 15.6MB/s in 37s

2024-09-26 14:56:40 (21.1 MB/s) - ‘val2017.zip’ saved [815585330/815585330]

--2024-09-26 14:56:45-- http://images.cocodataset.org/annotations/annotations_trainval2017.zip

Resolving images.cocodataset.org (images.cocodataset.org)... 52.216.152.124, 54.231.132.81, 3.5.31.140, ...

Connecting to images.cocodataset.org (images.cocodataset.org)|52.216.152.124|:80... connected.

HTTP request sent, awaiting response... 200 OK

Length: 252907541 (241M) [application/zip]

Saving to: ‘annotations_trainval2017.zip’

annotations_trainva 100%[===================>] 241.19M 20.3MB/s in 12s

2024-09-26 14:56:57 (20.3 MB/s) - ‘annotations_trainval2017.zip’ saved [252907541/252907541]

--2024-09-26 14:57:03-- https://github.com/aperture-data/aperturedb-python/raw/refs/heads/develop/examples/CocoDataPyTorch.py

Resolving github.com (github.com)... 140.82.113.4

Connecting to github.com (github.com)|140.82.113.4|:443... connected.

HTTP request sent, awaiting response... 302 Found

Location: https://raw.githubusercontent.com/aperture-data/aperturedb-python/refs/heads/develop/examples/CocoDataPyTorch.py [following]

--2024-09-26 14:57:03-- https://raw.githubusercontent.com/aperture-data/aperturedb-python/refs/heads/develop/examples/CocoDataPyTorch.py

Resolving raw.githubusercontent.com (raw.githubusercontent.com)... 185.199.109.133, 185.199.110.133, 185.199.111.133, ...

Connecting to raw.githubusercontent.com (raw.githubusercontent.com)|185.199.109.133|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 4265 (4.2K) [text/plain]

Saving to: ‘CocoDataPyTorch.py.1’

CocoDataPyTorch.py. 100%[===================>] 4.17K --.-KB/s in 0s

2024-09-26 14:57:03 (24.2 MB/s) - ‘CocoDataPyTorch.py.1’ saved [4265/4265]

Steps

Load PyTorch dataset into ApertureDB

This step takes a PyTorch CocoDetection dataset and ingests it into ApertureDB.

To handle the semantics for ApertureDB, a class CocoDataPyTorch is implemented. It uses aperturedb.PyTorchData as a base class and implements a method, generate_query, which translates the data as it is represented in CocoDetection (a PyTorch dataset object) into the corresponding queries for ApertureDB.

from aperturedb.ParallelLoader import ParallelLoader

from CocoDataPyTorch import CocoDataPyTorch

from aperturedb.CommonLibrary import create_connector

client = create_connector()

loader = ParallelLoader(client)

coco_detection = CocoDataPyTorch(dataset_name = "coco_validation_with_annotations")

# Let's use 100 annotated images from the CocoDataPyTorch object for this demo;

# ingesting all of them might be time-consuming.

images = []

for t in coco_detection:

    X, y = t

    if len(y) > 0:

        images.append(t)

        if len(images) == 100:

            break

loader.ingest(images, stats=True)

loading annotations into memory...

Done (t=0.43s)

creating index...

index created!

Progress: 100.00% - ETA(s): 0.78

============ ApertureDB Loader Stats ============

Total time (s): 1.6587610244750977

Total queries executed: 100

Avg Query time (s): 0.06576182365417481

Query time std: 0.20419635779095394

Avg Query Throughput (q/s): 60.82556379572154

Overall insertion throughput (element/s): 60.28595953515621

Total inserted elements: 100

Total successful commands: 542

=================================================

Note that we ingested only the first 100 images that have annotations.
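To make the role of generate_query more concrete, here is a minimal sketch of the kind of translation it performs for one (image, annotations) pair. It is not the code from CocoDataPyTorch.py: the function name coco_item_to_query is hypothetical, and the exact fields used in the AddImage and AddBoundingBox commands are assumptions that should be checked against the ApertureDB query reference.

```python
def coco_item_to_query(image_bytes, annotations, dataset_name):
    """Translate one COCO item into an ApertureDB-style query plus its blobs.

    Hypothetical helper for illustration only; the field names inside the
    commands are assumptions, not taken from CocoDataPyTorch.py.
    """
    # One command adds the image and gives it a reference for later commands.
    query = [{
        "AddImage": {
            "_ref": 1,
            "properties": {"dataset_name": dataset_name},
        }
    }]
    # One command per annotation attaches a bounding box to that image.
    for ann in annotations:
        x, y, w, h = ann["bbox"]  # COCO boxes are [x, y, width, height]
        query.append({
            "AddBoundingBox": {
                "image_ref": 1,
                "rectangle": {
                    "x": int(x), "y": int(y),
                    "width": int(w), "height": int(h),
                },
                "properties": {"category_id": ann["category_id"]},
            }
        })
    # The encoded image travels alongside the query as a blob.
    return query, [image_bytes]


sample_annotation = {"bbox": [236.98, 142.51, 24.7, 69.5], "category_id": 64}
query, blobs = coco_item_to_query(
    b"jpeg-bytes", [sample_annotation], "coco_validation_with_annotations")
print(len(query), "commands for 1 image with 1 annotation")
```

A single ingested item can therefore expand into several commands, one for the image and one per annotation.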

Inspect a sample of the data that has been ingested into ApertureDB

from aperturedb import Images

import pandas as pd

from aperturedb.CommonLibrary import create_connector

client = create_connector()

images = Images.Images(client)

constraints = Images.Constraints()

constraints.equal("dataset_name", "coco_validation_with_annotations")

images.search(limit=5, constraints=constraints)

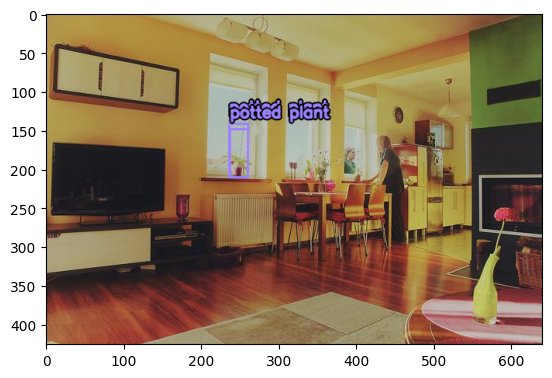

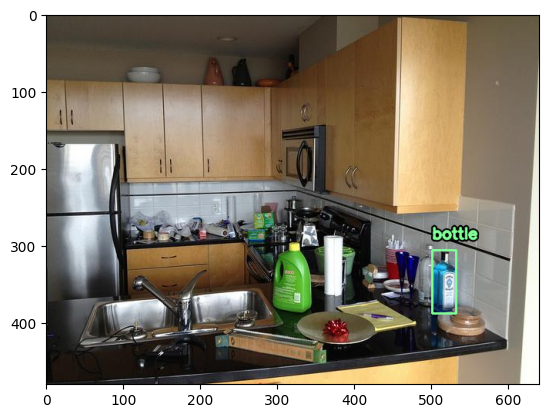

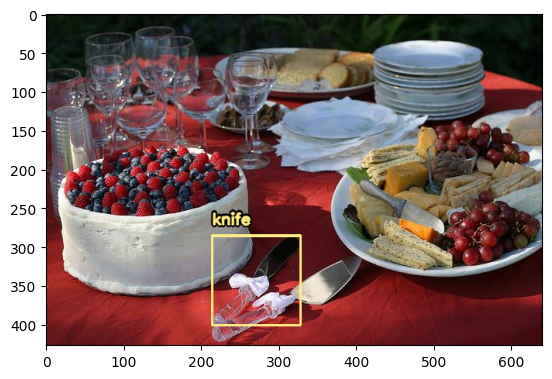

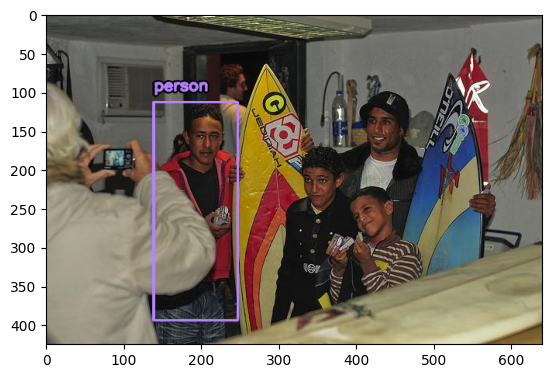

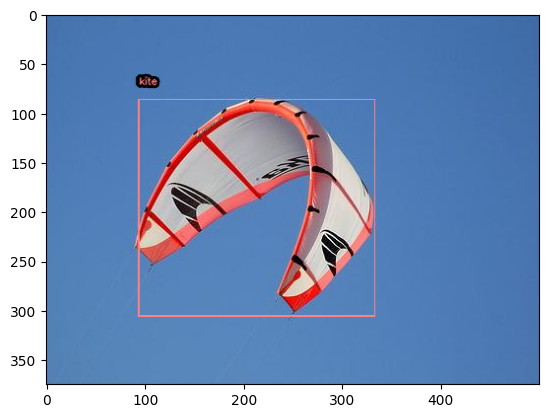

images.display(show_bboxes=True)

pd.json_normalize(images.get_properties(images.get_props_names()).values())

| | area | bbox | category_id | dataset_name | id | image_id | iscrowd | segmentation |

|---|---|---|---|---|---|---|---|---|

| 0 | 531.80710 | 236.98 142.51 24.7 69.5 | 64 | coco_validation_with_annotations | 26547 | 139 | 0 | [240.86, 211.31, 240.16, 197.19, 236.98, 192.2... |

| 1 | 2039.87725 | 501.24 306.49 31.96 82.64 | 44 | coco_validation_with_annotations | 88785 | 7574 | 0 | [513.16, 308.53, 513.0, 320.13, 502.4, 330.06,... |

| 2 | 3399.55890 | 214.48 285.49 114.18 116.49 | 49 | coco_validation_with_annotations | 696112 | 2157 | 0 | [267.75, 340.3, 270.58, 332.09, 276.82, 321.47... |

| 3 | 14333.69630 | 138.8 113.43 109.12 281.42 | 1 | coco_validation_with_annotations | 457532 | 5193 | 0 | [145.5, 194.79, 163.68, 190.96, 166.55, 180.43... |

| 4 | 25232.73910 | 93.96 86.13 240.41 220.09 | 38 | coco_validation_with_annotations | 623269 | 7784 | 0 | [109.2, 252.89, 93.96, 239.35, 116.82, 161.47,... |

Use data from ApertureDB in a PyTorch DataLoader

Elements that can be queried from ApertureDB can be used as a dataset, which in turn can be consumed by the PyTorch DataLoader. The following example does this with the subset of data ingested above.

from aperturedb import Images

from aperturedb import PyTorchDataset

import time

from IPython.display import Image, display

import cv2

from aperturedb.CommonLibrary import create_connector

client = create_connector()

query = [{

    "FindImage": {

        "constraints": {

            "dataset_name": ["==", "coco_validation_with_annotations"]

        },

        "blobs": True

    }

}]

dataset = PyTorchDataset.ApertureDBDataset(client, query)

print("Total Images in dataloader:", len(dataset))

start = time.time()

# Iterate over dataset.

for i, img in enumerate(dataset):

    if i >= 5:

        break

    # img[0] is a decoded, cv2 image

    converted = cv2.cvtColor(img[0], cv2.COLOR_BGR2RGB)

    encoded = cv2.imencode(ext=".jpeg", img=converted)[1]

    ipyimage = Image(data=encoded, format="JPEG")

    display(ipyimage)

print("Throughput (imgs/s):", len(dataset) / (time.time() - start))

Total Images in dataloader: 100

Throughput (imgs/s): 1021.0336181503932
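When only part of a dataset is iterated, one option is to compute the rate from the number of items actually consumed. The helper below is a hedged sketch of that idea; measure_throughput is a hypothetical name, and the range object merely stands in for a dataset so that the example runs without an ApertureDB instance.

```python
import time
from itertools import islice


def measure_throughput(items, limit=None):
    """Iterate over at most `limit` items and return (count, items per second)."""
    start = time.perf_counter()
    # islice stops after `limit` items; limit=None consumes everything.
    count = sum(1 for _ in islice(items, limit))
    elapsed = time.perf_counter() - start
    rate = count / elapsed if elapsed > 0 else float("inf")
    return count, rate


# Stand-in iterable; in the notebook this would be the dataset object.
count, rate = measure_throughput(range(1000), limit=5)
print("Items consumed:", count)
print("Throughput (items/s):", rate)
```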

Create a dataset with resized images

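The FindImage command also accepts custom constraints and operations; see the ApertureDB query documentation for more details. The query below adds a resize operation with a width of 224.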

query = [{

    "FindImage": {

        "constraints": {

            "dataset_name": ["==", "coco_validation_with_annotations"]

        },

        "operations": [

            {

                "type": "resize",

                "width": 224

            }

        ],

        "blobs": True

    }

}]

dataset = PyTorchDataset.ApertureDBDataset(client, query)

print("Total Images in dataloader:", len(dataset))

start = time.time()

# Iterate over dataset.

for i, img in enumerate(dataset):

    if i >= 5:

        break

    # img[0] is a decoded, cv2 image

    converted = cv2.cvtColor(img[0], cv2.COLOR_BGR2RGB)

    encoded = cv2.imencode(ext=".jpeg", img=converted)[1]

    ipyimage = Image(data=encoded, format="JPEG")

    display(ipyimage)

print("Throughput (imgs/s):", len(dataset) / (time.time() - start))

Total Images in dataloader: 100

Throughput (imgs/s): 231.35447843109566
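The two queries used so far differ only in their operations list, so a small helper can build both. This is a sketch for illustration; build_find_image_query is a hypothetical name and is not part of the aperturedb package. It only assembles the same dictionary structure shown in the cells above.

```python
def build_find_image_query(dataset_name, width=None, height=None):
    """Build a FindImage query for one dataset, optionally with a resize operation."""
    find_image = {
        "constraints": {"dataset_name": ["==", dataset_name]},
        "blobs": True,
    }
    # Add the resize operation only when a target size is requested.
    if width is not None or height is not None:
        resize = {"type": "resize"}
        if width is not None:
            resize["width"] = width
        if height is not None:
            resize["height"] = height
        find_image["operations"] = [resize]
    return [{"FindImage": find_image}]


plain_query = build_find_image_query("coco_validation_with_annotations")
resized_query = build_find_image_query("coco_validation_with_annotations", width=224)
print(resized_query)
```

Either result can be passed as the query argument in the same way as the hand-written dictionaries above.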

Create a DataLoader from the dataset to use in other PyTorch methods.

from torch.utils.data import DataLoader

dl = DataLoader(dataset=dataset)

# dl is a torch.utils.data.DataLoader object, which can be used in PyTorch

# https://pytorch.org/tutorials/beginner/basics/data_tutorial.html

len(dl)

100

The PyTorch DataLoader (dl) provides the interfaces to batch, shuffle, and load this dataset with multiple worker processes in the remainder of the pipeline.
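As a rough illustration of those interfaces, the sketch below batches and shuffles a stand-in dataset. FakeImageDataset is hypothetical and only mimics a dataset of 100 equally sized images, so that the example runs without an ApertureDB instance; the batch size of 16 is an arbitrary choice.

```python
import torch
from torch.utils.data import DataLoader, Dataset


class FakeImageDataset(Dataset):
    """Hypothetical stand-in for a dataset of 100 fixed-size images with labels."""

    def __init__(self, n=100):
        self.n = n

    def __len__(self):
        return self.n

    def __getitem__(self, idx):
        image = torch.zeros(3, 224, 224)  # placeholder pixel data
        label = idx % 10                  # placeholder label
        return image, label


# batch_size groups samples; shuffle reorders them each epoch;
# num_workers > 0 would load batches in separate worker processes.
loader = DataLoader(FakeImageDataset(), batch_size=16, shuffle=True, num_workers=0)

batch_shapes = [tuple(images.shape) for images, labels in loader]
print("Batches:", len(loader))
print("First batch shape:", batch_shapes[0])
print("Last batch shape:", batch_shapes[-1])
```

The default collation stacks samples into one tensor, which requires every image in a batch to have the same shape. Images of differing sizes would need a fixed-size resize or a custom collate_fn.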